In my case, I use Sora, which is developed by Shiguredo.

In short, this library is a wrapper around libwebrtc.

What I want to implement

Showing the camera preview on the device's screen and sending the same video to a server at the same time.

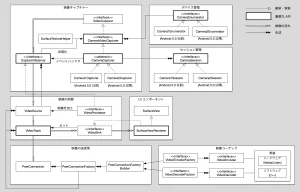

Architecture

The following image is very helpful for understanding the WebRTC architecture of an Android application.

Thanks to this reference:

https://medium.com/shiguredo/libwebrtc-android-%E3%81%AE%E3%82%AB%E3%83%A1%E3%83%A9-api-6ca7d804ec87
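To make the "preview and send at the same time" idea concrete, here is a toy model in Kotlin. The classes `Frame`, `Sink`, `Track` and `RecordingSink` are hypothetical stand-ins made up for illustration, not the libwebrtc API: one track hands every frame to all registered sinks, so a preview renderer and the sending side can consume the same frames independently. Conceptually, the real `VideoTrack.addSink()` used later plays the role of `addSink` here, although libwebrtc's internals are more involved.

```kotlin
// Toy model only: these types are hypothetical, not org.webrtc classes.
data class Frame(val timestampNs: Long)

interface Sink {
    fun onFrame(frame: Frame)
}

class Track {
    private val sinks = mutableListOf<Sink>()

    // Register a sink once; duplicates are ignored.
    fun addSink(sink: Sink) {
        if (sink !in sinks) sinks.add(sink)
    }

    fun removeSink(sink: Sink) {
        sinks.remove(sink)
    }

    // Called for every captured frame: each registered sink receives the same frame.
    fun deliver(frame: Frame) {
        for (sink in sinks.toList()) sink.onFrame(frame)
    }
}

// A sink that just remembers what it received (stands in for a renderer or a sender).
class RecordingSink(val name: String) : Sink {
    val received = mutableListOf<Frame>()
    override fun onFrame(frame: Frame) {
        received.add(frame)
    }
}
```

In this model, removing one sink (for example the preview) does not stop delivery to the others, so the preview can be torn down without interrupting the stream.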

Points

- SurfaceViewRenderer

You have to initialize it before capturing the preview; otherwise the preview can't be shown on your device's screen.

```kotlin
// Initialize the renderer with the shared EGL context before any frames are drawn
surfaceViewRenderer!!.init(egl!!.eglBaseContext, null)
```

- Capturer

Once you call `startCapture()`, the camera starts capturing, and the frames are shown as a preview and sent to the server. The device names identify the available cameras, such as the front and back cameras.
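The order of the device names depends on the device, so a fixed index such as `deviceNames[1]` is not guaranteed to be the front camera. A minimal sketch, assuming you would rather choose by facing: `pickCamera` is a hypothetical helper, and it only touches `Camera2Enumerator`'s `deviceNames` and `isFrontFacing()` through its parameters, so it can be tested without a device.

```kotlin
// Hypothetical helper: pick a camera by facing instead of by a fixed index.
// wantFront = true  -> first front-facing camera
// wantFront = false -> first camera that is not front-facing
// If nothing matches, fall back to the first camera; returns null if there are no cameras.
//
// Usage with libwebrtc (sketch):
//   val enumerator = Camera2Enumerator(activity)
//   val name = pickCamera(enumerator.deviceNames, enumerator::isFrontFacing, true)
//   capturer = name?.let { enumerator.createCapturer(it, null) }
fun pickCamera(
    deviceNames: Array<String>,
    isFrontFacing: (String) -> Boolean,
    wantFront: Boolean
): String? =
    deviceNames.firstOrNull { isFrontFacing(it) == wantFront }
        ?: deviceNames.firstOrNull()
```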

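```kotlin
val enumerator = Camera2Enumerator(activity)
// Camera names reported by Camera2 (which index is front or back depends on the device)
val deviceNames = enumerator.deviceNames
// Use the second camera; on many devices this is the front camera
capturer = enumerator.createCapturer(deviceNames[1], null)
```

- Add your SurfaceViewRenderer to the video track as a sink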

In my case, I receive the local MediaStream (which bundles multiple tracks, such as audio and video) in an event handler callback.

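```kotlin
override fun onAddLocalStream(mediaChannel: SoraMediaChannel, ms: MediaStream) {
    surfaceViewRenderer?.apply {
        // Start the camera at the renderer's current size and 30 fps
        capturer?.startCapture(this.width, this.height, 30)
        // Show the local video in the preview by adding the renderer as a sink
        ms.videoTracks.firstOrNull()?.addSink(this)
    }
}
```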
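One caveat with passing `this.width` and `this.height` to `startCapture()`: a View reports a size of 0 until it has been laid out, and the camera may not support that exact size. As far as I know, libwebrtc's camera session picks a nearby supported format by itself, but choosing explicitly makes the actual size predictable. A minimal sketch under those assumptions: `CaptureSize` and `chooseCaptureSize` are hypothetical names, the 1280x720 fallback is an arbitrary default, and "closest" here means the smallest sum of width and height differences.

```kotlin
import kotlin.math.abs

// Hypothetical width/height pair (a local type, not android.util.Size).
data class CaptureSize(val width: Int, val height: Int)

// Chooses the size to pass to startCapture().
// - A View is 0x0 before layout, so use the fallback in that case.
// - If supported sizes are given, return the one closest to the request.
fun chooseCaptureSize(
    viewWidth: Int,
    viewHeight: Int,
    supported: List<CaptureSize> = emptyList(),
    fallback: CaptureSize = CaptureSize(1280, 720)
): CaptureSize {
    val requested =
        if (viewWidth > 0 && viewHeight > 0) CaptureSize(viewWidth, viewHeight) else fallback
    return supported.minByOrNull {
        abs(it.width - requested.width) + abs(it.height - requested.height)
    } ?: requested
}
```

The supported list could be built with something like `enumerator.getSupportedFormats(deviceName).map { CaptureSize(it.width, it.height) }`. Camera formats are usually listed in landscape orientation, so for a portrait preview you may want to swap the view's width and height before matching.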

I hope these tips help with your WebRTC development :>
